Logistic Regression

May 03, 2020

Binary Classification

We want to classify $y \in \{0, 1\}$, a binary outcome.

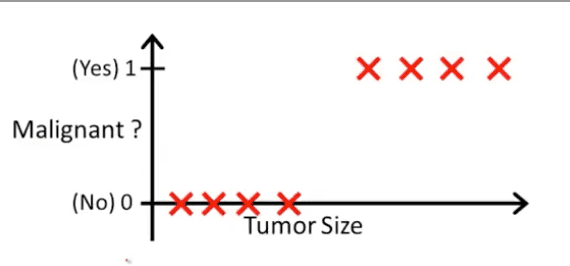

Example (Tumour Classification)

In an example like this, we could try using linear regression and use a threshold classifier such as:

- If $h_\theta(x) \geq 0.5$, predict $y = 1$

- If $h_\theta(x) < 0.5$, predict $y = 0$

However, outlier points can skew the regression line and give us a worse hypothesis. For this reason, linear regression isn't a good fit for binary classification.

Linear regression can also output values of $h_\theta(x)$ that fall outside the range $[0, 1]$, even though $y$ is only ever $0$ or $1$, again making it a weak model for binary classification.
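A minimal sketch of both weaknesses, assuming made-up tumour sizes and labels (the data and the helper names `fit_line` and `cutoff` are illustrative, not from the original example). It fits a least-squares line, finds the size at which the line crosses the 0.5 threshold, then refits after adding a single large outlier:

```python
def fit_line(xs, ys):
    """Ordinary least squares for h(x) = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def cutoff(intercept, slope, threshold=0.5):
    """Tumour size at which the fitted line crosses the threshold."""
    return (threshold - intercept) / slope


# Hypothetical data: tumour sizes and labels (0 = benign, 1 = malignant).
sizes = [1, 2, 3, 4, 6, 7, 8, 9]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

clean_fit = fit_line(sizes, labels)
clean_cutoff = cutoff(*clean_fit)

# Add one very large malignant tumour and refit.
skewed_fit = fit_line(sizes + [30], labels + [1])
skewed_cutoff = cutoff(*skewed_fit)

# The line is unbounded, so its output at size 30 is not a valid probability.
h_at_30 = skewed_fit[0] + skewed_fit[1] * 30

print(f"cutoff without outlier: {clean_cutoff:.2f}")
print(f"cutoff with outlier:    {skewed_cutoff:.2f}")
print(f"h(30) with outlier:     {h_at_30:.2f}")
```

With this data the cutoff moves from 5 to roughly 6.16, so the malignant tumour of size 6 would now be predicted as benign, and the line's output at size 30 is greater than 1.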

Introducing Logistic Regression

For this type of model, we want:

$$0 \leq h_\theta(x) \leq 1$$

For this reason, we will need to change our definition of $h_\theta(x)$ from what was used in linear regression. Our new hypothesis will be defined as:

$$h_\theta(x) = g(\theta^T x)$$

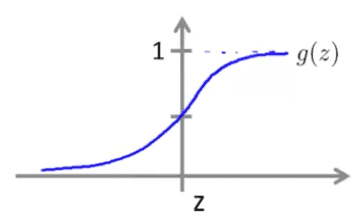

Where $g(z) = \frac{1}{1 + e^{-z}}$ is referred to as the sigmoid or logistic function. The full version of our hypothesis function is:

$$h_\theta(x) = \frac{1}{1 + e^{-\theta^T x}}$$

As before, we want to fit the parameters $\theta$.
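The sigmoid and the hypothesis can be sketched in a few lines of plain Python. The parameter values and the input below are hypothetical, chosen only to show a call. The two-branch form of `sigmoid` is a common way to avoid overflow in `math.exp` for large negative `z`.

```python
import math


def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z)), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    exp_z = math.exp(z)  # safe: z < 0, so exp(z) < 1
    return exp_z / (1.0 + exp_z)


def hypothesis(theta, x):
    """h_theta(x) = g(theta^T x); x must include the bias term x_0 = 1."""
    z = sum(t * xi for t, xi in zip(theta, x))
    return sigmoid(z)


# Hypothetical parameters and input (x_0 = 1 is the bias feature).
theta = [-4.0, 1.0]
x = [1.0, 5.0]
print(hypothesis(theta, x))  # sigmoid(1.0), roughly 0.73
```

Whatever the value of $\theta^T x$, the output stays within $[0, 1]$, which is the property we asked for.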

Interpretation of Hypothesis

When $h_\theta(x)$ outputs a number, we will interpret it as the estimated probability that $y = 1$ on input $x$.

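For example, suppose that in the tumour example we have, for some patient with features $x$:

$$h_\theta(x) = 0.7$$

i.e. the probability of $y = 1$ is $0.7$. Then we say that the patient has a 70% chance of the tumour being malignant. More formally, we can write this as:

$$h_\theta(x) = P(y = 1 \mid x; \theta)$$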

Since $y$ can only be either $0$ or $1$, we can also compute the probability that $y = 0$:

$$P(y = 0 \mid x; \theta) = 1 - P(y = 1 \mid x; \theta)$$
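As a small illustration of this interpretation, the sketch below computes both class probabilities for one patient. The parameters, the patient's tumour size, and the name `prob_malignant` are assumptions made up for this example.

```python
import math


def prob_malignant(theta, x):
    """P(y = 1 | x; theta) under the logistic hypothesis."""
    z = sum(t * xi for t, xi in zip(theta, x))
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical fitted parameters and one patient (x_0 = 1, x_1 = tumour size).
theta = [-3.0, 0.5]
patient = [1.0, 7.0]

p_malignant = prob_malignant(theta, patient)  # P(y = 1 | x; theta)
p_benign = 1.0 - p_malignant                  # P(y = 0 | x; theta)

print(f"P(malignant) = {p_malignant:.3f}, P(benign) = {p_benign:.3f}")
```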

Decision Boundary

For logistic regression, we will define our hypothesis decision boundary as:

- Predict $y = 1$ if $h_\theta(x) \geq 0.5$

- Predict $y = 0$ if $h_\theta(x) < 0.5$

When will we end up in such cases? If we look at the sigmoid function:

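- $g(z) \geq 0.5$ when $z \geq 0$

- $g(z) < 0.5$ when $z < 0$

Since $h_\theta(x) = g(\theta^T x)$, we predict $y = 1$ exactly when $\theta^T x \geq 0$, and $y = 0$ when $\theta^T x < 0$.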

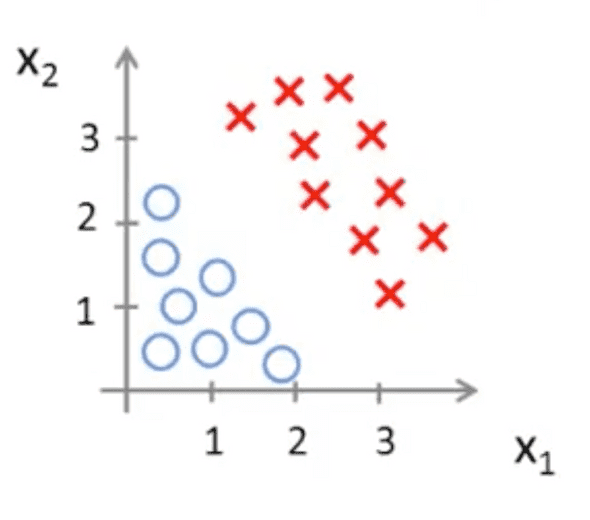
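The consequence of this property is that a prediction only needs the sign of $\theta^T x$, not the sigmoid itself. A minimal sketch, assuming hypothetical parameters and inputs, that checks both rules agree:

```python
import math


def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_from_probability(theta, x):
    """Predict 1 when h_theta(x) >= 0.5, else 0."""
    z = sum(t * xi for t, xi in zip(theta, x))
    return 1 if sigmoid(z) >= 0.5 else 0


def predict_from_sign(theta, x):
    """Predict 1 when theta^T x >= 0, else 0 (no sigmoid needed)."""
    z = sum(t * xi for t, xi in zip(theta, x))
    return 1 if z >= 0 else 0


# Hypothetical parameters; x_0 = 1 is the bias feature.
theta = [-2.0, 1.0]
inputs = [[1.0, x1] for x1 in (0.0, 1.0, 2.0, 3.0, 4.0)]

for x in inputs:
    assert predict_from_probability(theta, x) == predict_from_sign(theta, x)

print([predict_from_sign(theta, x) for x in inputs])  # [0, 0, 1, 1, 1]
```

In floating point the two rules can disagree for values of $\theta^T x$ extremely close to zero, so the sign test is usually the more reliable of the two.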

Example

Consider the following dataset:

Our hypothesis would therefore be:

Further suppose that our fitted values of theta are:

In this case, we will predict if:

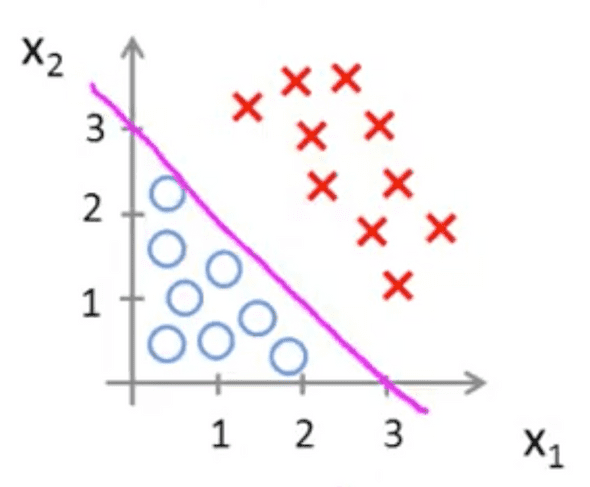

Which is an equation for the following decision boundary line:

Therefore, we will predict for any point that falls to the right of the line. Vice-versa for .
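A linear decision boundary of this kind can be sketched as follows. The parameter values $\theta = (-3, 1, 1)$ and the test points are illustrative assumptions; with these values the boundary is the line $x_1 + x_2 = 3$.

```python
def predict(theta, x1, x2):
    """Predict 1 when theta_0 + theta_1*x1 + theta_2*x2 >= 0, else 0."""
    z = theta[0] + theta[1] * x1 + theta[2] * x2
    return 1 if z >= 0 else 0


# Illustrative parameters (an assumption): boundary is the line x1 + x2 = 3.
theta = [-3.0, 1.0, 1.0]

points = [(1.0, 1.0), (2.0, 1.0), (3.0, 3.0), (0.0, 2.5)]
predictions = [predict(theta, x1, x2) for x1, x2 in points]
print(predictions)  # [0, 1, 1, 0]
```

The point $(2, 1)$ lies exactly on the line and is predicted as $y = 1$ because the rule uses $\geq$.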

Non-linear Decision Boundary

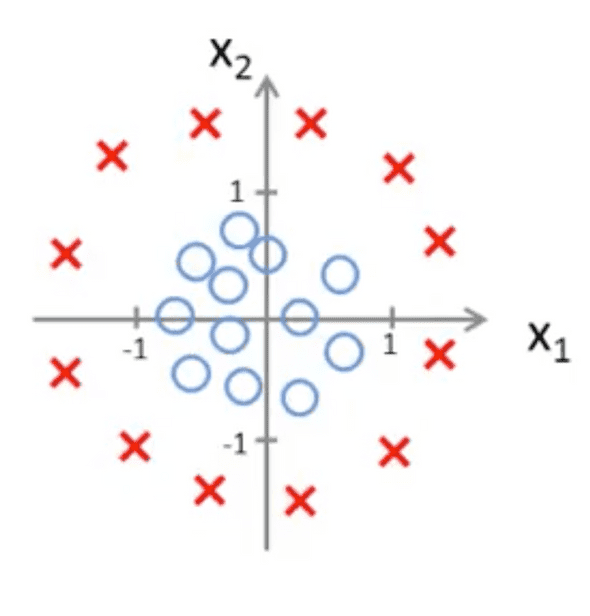

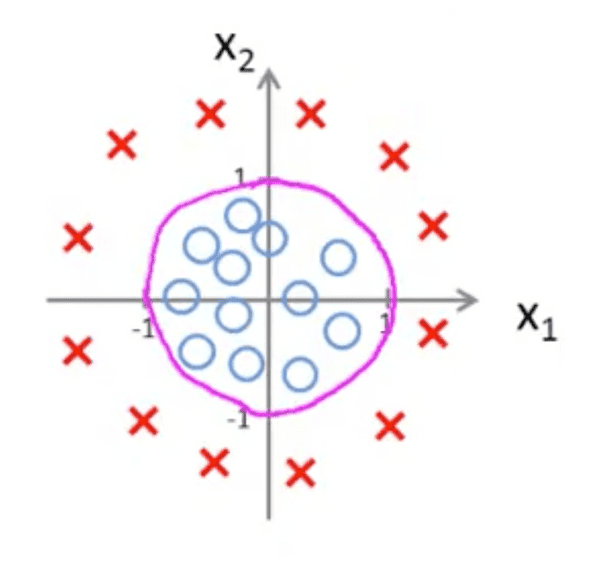

Consider the following dataset:

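In linear regression, we were able to add higher-order polynomial terms for situations like this. We can do the same with logistic regression. Suppose our hypothesis looks like:

$$h_\theta(x) = g(\theta_0 + \theta_1 x_1 + \theta_2 x_2 + \theta_3 x_1^2 + \theta_4 x_2^2)$$

Further suppose that our fitted values are:

$$\theta = \begin{bmatrix} -1 \\ 0 \\ 0 \\ 1 \\ 1 \end{bmatrix}$$

Therefore, we predict $y = 1$ if:

$$-1 + x_1^2 + x_2^2 \geq 0$$

This produces the following decision boundary, a circle of radius 1 centred on the origin:

$$x_1^2 + x_2^2 = 1$$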

You can use polynomial terms to fit decision boundaries that take on many different shapes and sizes, not just circles.
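A minimal sketch of a non-linear boundary built from polynomial terms. The parameter values $\theta = (-1, 0, 0, 1, 1)$, the test points, and the helper name `polynomial_features` are assumptions for illustration; with these values the boundary is the unit circle.

```python
def polynomial_features(x1, x2):
    """Map (x1, x2) to [1, x1, x2, x1^2, x2^2]."""
    return [1.0, x1, x2, x1 ** 2, x2 ** 2]


def predict(theta, x1, x2):
    """Predict 1 when theta^T f(x) >= 0, where f adds the squared terms."""
    features = polynomial_features(x1, x2)
    z = sum(t * f for t, f in zip(theta, features))
    return 1 if z >= 0 else 0


# Illustrative parameters (an assumption): boundary is the circle x1^2 + x2^2 = 1.
theta = [-1.0, 0.0, 0.0, 1.0, 1.0]

points = [(0.0, 0.0), (0.5, 0.5), (1.0, 0.0), (1.0, 1.0), (-2.0, 0.0)]
predictions = [predict(theta, x1, x2) for x1, x2 in points]
print(predictions)  # [0, 0, 1, 1, 1]
```

Changing the weights on the squared terms changes the shape: for example, `theta = [-1.0, 0.0, 0.0, 1.0, 4.0]` gives the ellipse $x_1^2 + 4x_2^2 = 1$ instead of a circle.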